Large language models (LLMs) are everywhere and they're changing how we interact with artificial intelligence (AI). AI-powered chats and generative services like ChatGPT, Claude and Gemini are especially popular.

Many companies aren’t yet getting the most out of these technologies. LLMs are often treated as standalone tools, even though they can be seamlessly integrated into existing systems — like digital workstations, portals or content management systems (CMS) — using APIs.

One exciting use case is chatting directly with your company portal using a RAG chatbot (Retrieval-Augmented Generation). This type of chatbot taps into your company’s data to deliver accurate, relevant answers in real time.

That means users can find the information they need faster. No more digging around. They just ask a question and get a clear answer.

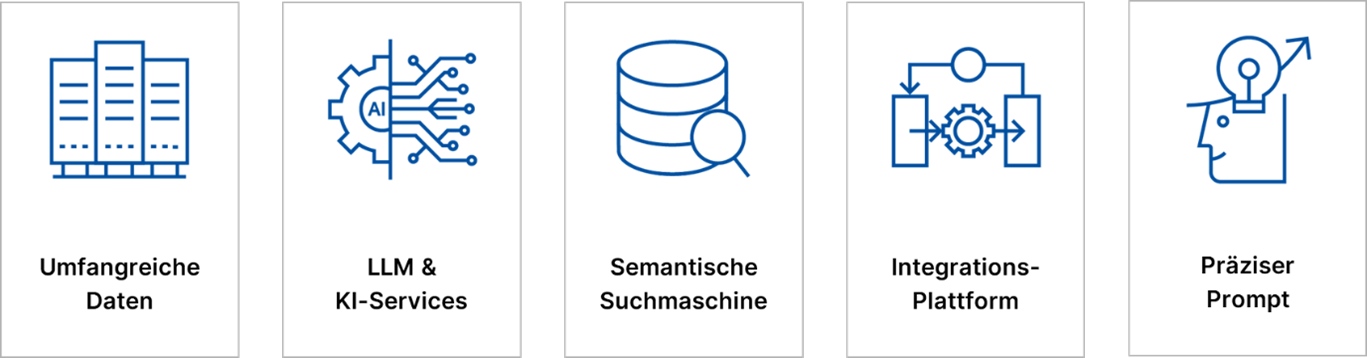

The Recipe for a Successful RAG Chatbot: 5 Key Ingredients

A RAG chatbot works like a great recipe: it all comes down to the right mix of ingredients. But instead of flour, sugar and salt, you’ll need data sources, search technology and AI services.

Here are the five essentials for building a powerful RAG chatbot and how to bring them together for the best results.

Why not just use a standard chatbot?

It’s a fair question: Why not stick with a classic chatbot?

The answer is simple. Traditional chatbots are often too limited. They rely on structured, prewritten answers and only know what they've been taught. You can’t easily update them with new information, and expanding them—say, to connect new data sources or workflows—can be difficult.

Figure 1 - Ingredients for a Conversation with Your Portal

Ingredient 1: Comprehensive and Accessible Data Sources

A chatbot is only as smart as the data it can access. And that’s where the first challenge begins: Is the data even available?

Much of your company’s information is protected by user permissions. Portals like employee or service platforms hold valuable content, but not everyone can see it. So it’s important to clarify how the AI can access this data using the same permissions as the user.
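One way to picture permission-aware access is to filter documents by the user's groups before anything reaches the AI. The following is a minimal sketch under stated assumptions: the `Document` class, the group names, and the sample content are hypothetical, and a real portal would enforce permissions in its own access layer or search index.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    title: str
    text: str
    allowed_groups: frozenset[str]  # groups permitted to read this document


def visible_documents(docs: list[Document], user_groups: set[str]) -> list[Document]:
    """Keep only documents that share at least one group with the user."""
    return [d for d in docs if d.allowed_groups & user_groups]


# Hypothetical portal content, for illustration only.
PORTAL_DOCS = [
    Document("Travel policy", "Book trips through the travel desk.", frozenset({"employees"})),
    Document("Salary bands", "Confidential compensation data.", frozenset({"hr"})),
]

if __name__ == "__main__":
    # A user in the "employees" group never sees the HR-only document.
    for doc in visible_documents(PORTAL_DOCS, {"employees"}):
        print(doc.title)
```

Filtering before retrieval, rather than after the answer is generated, means restricted content never enters the prompt in the first place.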

You’ll also want to check if company agreements are needed to unlock certain data. These often take time to coordinate.

And don’t forget regulatory requirements. Depending on your industry, there may be rules you need to follow.

These factors lay the groundwork for everything else. If they’re not handled right, even the smartest AI won’t be able to help.

Ingredient 2: The Large Language Model (LLM)

At the heart of every RAG chatbot is the Large Language Model (LLM): the AI that generates answers based on the data it retrieves. That brings up an important question: where should the LLM run?

Cloud-based LLMs are ready to go. You don’t need your own infrastructure, and updates come automatically from the provider. That means less operational effort for you and access to newer model versions as the provider releases them.

Here are some common cloud-based options:

- OpenAI (Azure OpenAI Service / ChatGPT Enterprise): A leader in enterprise-ready language models with powerful APIs.

- Google AI (Gemini / Vertex AI): Offers a wide set of AI tools, including advanced LLMs for business use.

Of course, if you’re working with sensitive data, you’ll want to keep data protection and compliance in mind. The good news is most cloud providers offer solid solutions for that too.

On-premises LLMs are the alternative. You run the model on your own hardware, giving you full control over your data and infrastructure. This setup works best if your organization has strict privacy or compliance requirements.

Some examples of openly available (open-weight) LLMs you can run locally:

- Meta (Llama 3): Highly customizable and ideal for running in your own environment.

- Mistral AI (Mistral 7B / Mixtral 8x7B): Built for powerful performance in on-premises setups.

Running LLMs locally takes more resources: think powerful hardware, regular maintenance, and added costs. That’s why it’s important to weigh the pros and cons before going that route.

Whether you go with the cloud or stay on-premises, one thing’s clear: the LLM is a key piece of your RAG chatbot strategy.

Want to grow your AI setup later? No problem. Start small, then expand as you go.

Ingredient 3: A Powerful Search Engine

Why does a RAG chatbot need a search engine?

It’s simple: LLMs don’t know everything. They rely on the data they were trained on, so they don’t have your company’s specific knowledge. That’s where a search engine comes in. It connects the AI to your latest and most relevant content.

A strong search engine helps the chatbot pull in up-to-date information, adapt to different user roles, and respond with precision. It’s also fast and efficient.

But not all search technologies work the same way.

Full-text search matches the literal words in a query. It often misses results when users phrase things differently or use synonyms.

Semantic search, on the other hand, works with the meaning behind the words, typically by comparing vector embeddings of the query and the content. It can surface relevant answers even when the wording differs, because it matches intent, not just keywords.

Since your data powers the chatbot’s responses, semantic search gives you a clear edge.
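The difference between the two approaches can be illustrated with a toy example. Semantic search is commonly implemented by comparing embedding vectors with cosine similarity. In this sketch the three-dimensional vectors are hand-made assumptions for illustration; in practice they would come from an embedding model, and the function names are hypothetical.

```python
import math

# Hand-made 3-dimensional "embeddings" (assumed values, not model output).
DOCS = {
    "How to request vacation days": [0.9, 0.1, 0.0],
    "Resetting your VPN password": [0.0, 0.2, 0.9],
}


def keyword_search(query: str, docs: dict[str, list[float]]) -> list[str]:
    """Return titles that share at least one literal word with the query."""
    words = set(query.lower().split())
    return [title for title in docs if words & set(title.lower().split())]


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def semantic_search(query_vec: list[float], docs: dict[str, list[float]]) -> str:
    """Return the title whose embedding is closest to the query embedding."""
    return max(docs, key=lambda title: cosine(query_vec, docs[title]))


if __name__ == "__main__":
    # "time off" shares no word with either title, so keyword search finds nothing.
    print(keyword_search("time off", DOCS))
    # Assumed embedding for "time off", placed near the vacation document.
    print(semantic_search([0.8, 0.2, 0.1], DOCS))
```

The keyword lookup returns an empty list for "time off", while the vector comparison still picks the vacation document because the two vectors point in a similar direction.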

Ingredient 4: An Integration Platform for Control

How do you bring everything together? With the right integration platform, you can make sure all components work in sync — whether you’re building from scratch or scaling fast.

You’ve got options:

Option 1: Direct integration using code

If you want full control, coding in languages like Python or Java gives you maximum flexibility. This approach is great for developers who want to fine-tune how data flows.
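As a rough idea of what direct integration looks like, here is a minimal sketch of the retrieve-then-generate flow in Python. Everything in it is an assumption for illustration: `KNOWLEDGE_BASE` stands in for a search engine, the word-overlap ranking stands in for real retrieval, and `echo_llm` is a placeholder where a call to a cloud or on-premises model would go.

```python
from typing import Callable

# Hypothetical knowledge base; a real system would query a search engine instead.
KNOWLEDGE_BASE = [
    "Vacation requests are submitted through the HR portal.",
    "The IT help desk is open on weekdays.",
]


def retrieve(question: str, passages: list[str], top_k: int = 1) -> list[str]:
    """Rank passages by the number of words they share with the question."""
    q_words = set(question.lower().split())
    ranked = sorted(
        passages,
        key=lambda p: len(q_words & set(p.lower().split())),
        reverse=True,
    )
    return ranked[:top_k]


def answer(question: str, llm: Callable[[str], str]) -> str:
    """Retrieve context, build a prompt, and pass it to the given model function."""
    context = "\n".join(retrieve(question, KNOWLEDGE_BASE))
    prompt = f"Answer using only this context:\n{context}\n\nQuestion: {question}"
    return llm(prompt)


def echo_llm(prompt: str) -> str:
    """Placeholder for a real model call; returns the first context line."""
    return prompt.splitlines()[1]


if __name__ == "__main__":
    print(answer("How do I submit vacation requests?", echo_llm))
```

Passing the model in as a function keeps the flow independent of where the LLM runs, so the placeholder can later be swapped for a real client without touching the retrieval step.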

Option 2: Low-code or no-code platforms

Tools like Flowise or UiPath let you build with drag and drop. No deep coding knowledge needed. It’s a fast, flexible option and perfect for teams that want to move quickly.

Ingredient 5: The Right Prompt – The Heart of the RAG Model

Even the smartest chatbot needs the right input. A strong prompt tells the AI exactly what to do and helps it give you the best answer possible.

What makes a good prompt? Three key things:

- Clear wording – Be specific so the AI understands your question.

- Relevant context – Especially when using external or company-specific data.

- Defined format – Do you want a summary, a list, or a full explanation?

The better the prompt, the better the result.
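The three points above can be combined into a simple prompt template. This is a sketch, not a recommended wording: the function name `build_prompt`, the instruction text, and the default format are all assumptions you would adapt to your own model and use case.

```python
def build_prompt(
    question: str,
    passages: list[str],
    answer_format: str = "a short summary",
) -> str:
    """Combine clear instructions, retrieved context, and a desired output format."""
    # Number the passages so the model can tell them apart.
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "You are an assistant for a company portal.\n"
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        f"Respond as {answer_format}."
    )


if __name__ == "__main__":
    print(build_prompt(
        "Who approves travel?",
        ["Managers approve travel."],
        "a bulleted list",
    ))
```

Telling the model to admit when the context has no answer is one common way to reduce made-up responses, though how well it works depends on the model.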

Conclusion

A RAG chatbot is like a great recipe: success depends on using the right ingredients. Our advice? Start with a prototype, learn as you go, and scale up from there.

With the right setup, your RAG chatbot won’t just work. It’ll make a real difference for your business.

We get it—integrating AI into existing systems isn’t always straightforward. But that’s exactly where we come in. With experience from countless AI projects and deep know-how in data architecture and business processes, we’re here to help you build the right solution for your business.

Whether you’re just getting started or already have a plan in mind, we’ll support you every step of the way:

- Strategic consulting – We’ll help you identify the use cases that make sense for you

- Technical implementation – From connecting your data to integrating AI, we’ve got it covered

- Scaling and optimization – Your chatbot grows with your goals

Let’s turn your AI vision into something real and powerful.